Week #4 #

Project name: InnoSync #

Code repository: https://github.com/IU-Capstone-Project-2025/InnoSync

Team Members #

| Team Member | Telegram Alias | Email Address | Track | Responsibilities |
|---|---|---|---|---|
| (Lead) Ahmed Baha Eddine Alimi | @Allimi3 | a.alimi@innopolis.university | [Management/ProductDesign] | Team Management, Frontend & Design Supervision |
| Yusuf Abudghafforzoda | @abdugafforzoda | y.abudghafforzoda@innopolis.university | [Backend] | Backend Integration, Database Design and Conception |
| Egor Lazutkin | @Johnn_u | e.lazutkin@innopolis.university | [CustDev] | Customer Development & Customer Relation |
| Anvar Gilmiev | @glmvai | a.gilmiev@innopolis.university | [Frontend] | Frontend Implementation, Design Conception |
| Asgat Keruly | @east511 | a.keruly@innopolis.university | [Frontend] | Frontend Implementation, Design Conception |
| Aibek Bakirov | @bakirov817 | a.bakirov@innopolis.university | [DevOps] | Infrastructure Automation, CI/CD Setup, Deployment |
| Gurbanberdi Gulladyyev | @gurbangg | g.gulladyyev@innopolis.university | [ML] | ML Model Research & Development for QuickSyncing |

🎥 New MVP Demonstration #

📁 Google Drive Link to MVP Demo Video #

🧪 Testing and QA #

Summary of testing strategy and types of tests implemented #

Our testing strategy focuses on ensuring functionality at both the unit and integration levels. This week, we implemented the following:

Unit Tests:

- Developed using JUnit 5 to validate isolated components and business logic.

- Covered core methods in services and utility classes.

Integration Tests:

- Used Mockito to mock dependencies where needed.

- Verified end-to-end behavior between services, repositories, and the database layer.

Test Coverage Strategy:

- Focused on critical logic paths and potential edge cases.

- Aimed to detect regressions early during development and integration phases.

Tools used:

- JUnit 5 for the testing framework

- Mockito for mocking and verifying interactions

- No static analysis tools were used during this phase.

Evidence of test execution #

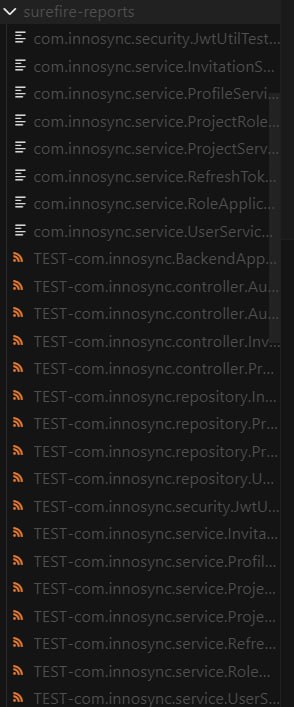

1. Test Report Structure #

This image displays the Surefire test report structure within the project directory. It showcases test class coverage across the following layers:

- Service layer

- Controller layer

- Repository layer

- Security layer

This layout shows that each of these layers has its own JUnit test classes.

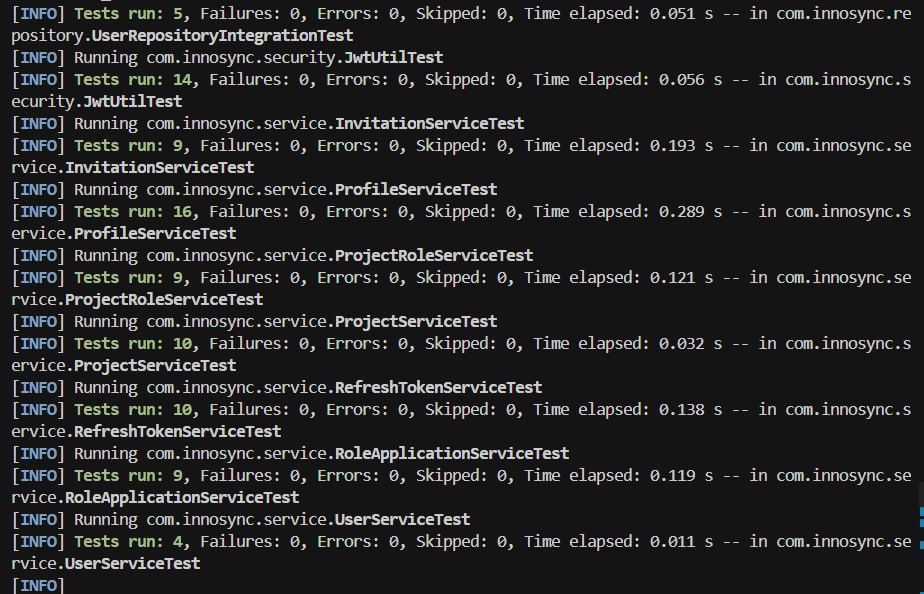

2. Detailed Execution Logs of Service Tests #

This screenshot shows detailed execution logs for individual service tests, including but not limited to:

- `JwtUtilTest`

- `ProfileServiceTest`

- `RefreshTokenServiceTest`

All tests executed successfully with zero failures or errors, which indicates that the covered business logic behaves as expected.

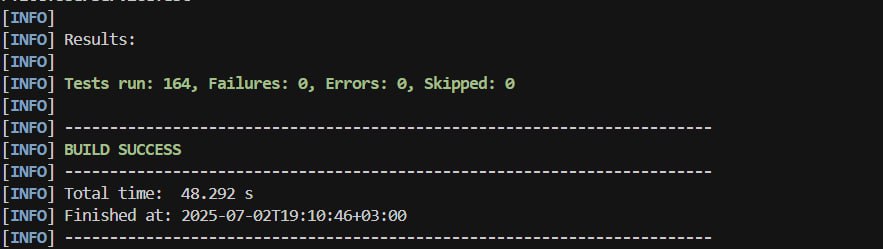

3. Test Summary #

This final image confirms:

- Completion of 164 test cases

- No failures, errors, or skipped tests

Code Coverage Report #

For a detailed view of the code coverage, please refer to the report View Docs.

CI/CD #

We implemented a CI/CD pipeline using GitHub Actions to automate testing and deployment.

- CI (Continuous Integration) runs backend and frontend tests on every push or pull_request to main or develop.

- CD (Continuous Deployment) is triggered only on `main` after tests pass. It securely connects to our Yandex Cloud VDS via SSH and runs `docker compose up -d --build` to deploy the new version.
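The pipeline described above could be expressed as a single GitHub Actions workflow along the following lines. This is a minimal sketch, not the repository's actual configuration: the file name, job names, the `backend` and `frontend` directories, the Node.js version, the `npm test` script, the secret names, the server path, and the `git pull` step are all assumptions made for illustration.

```yaml
# Hypothetical .github/workflows/ci-cd.yml (all names and paths are assumptions)
name: CI/CD

on:
  push:
    branches: [main, develop]
  pull_request:
    branches: [main, develop]

jobs:
  backend-tests:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-java@v4
        with:
          distribution: temurin
          java-version: "21"
      - run: mvn -B test
        working-directory: backend      # assumed directory name

  frontend-tests:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-node@v4
        with:
          node-version: "20"            # assumed version
      - run: npm ci && npm test         # assumes a "test" script exists
        working-directory: frontend     # assumed directory name

  deploy:
    # Runs only for pushes to main, and only after both test jobs succeed.
    needs: [backend-tests, frontend-tests]
    if: github.event_name == 'push' && github.ref == 'refs/heads/main'
    runs-on: ubuntu-latest
    steps:
      - uses: appleboy/ssh-action@v1
        with:
          host: ${{ secrets.SSH_HOST }}          # assumed secret name
          username: ${{ secrets.SSH_USER }}      # assumed secret name
          key: ${{ secrets.SSH_PRIVATE_KEY }}    # assumed secret name
          script: |
            cd /path/to/app    # placeholder path on the server
            git pull           # assumes the server holds a checkout of the repository
            docker compose up -d --build
```

Gating the `deploy` job with `needs` and an `if` on the `main` ref keeps pull requests and pushes to `develop` from triggering a deployment.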

Tools used #

- GitHub Actions

- SSH deployment via appleboy/ssh-action

- Docker & Docker Compose

- Yandex Cloud VM (VDS)

Challenges faced #

- Git remote conflict when tracking long nested branches (resolved with git remote prune origin)

- SSH deployment required manual key generation and GitHub secrets configuration

- Ensuring proper test environments across both backend (Java 21, Maven) and frontend (Node.js, Next.js)

- We have not yet found a free domain name; obtaining one is still in progress.

Links to CI/CD configuration files #

Deployment #

Staging #

The application is deployed on a Yandex Cloud virtual server (VDS). The server hosts both frontend and backend containers using Docker Compose.

- Backend: Spring Boot (Java 21) running on port 8080

- Frontend: Next.js app on port 3000

- Reverse proxy: NGINX to handle routing and public access

- CI/CD: Deploys automatically from GitHub on every main push
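The container layout listed above might look roughly like the following Docker Compose file. It is a sketch under stated assumptions, not the project's actual file: the build contexts, the NGINX image tag, the NGINX config path, and the published host port are hypothetical, and the database service is omitted because the report does not describe it.

```yaml
# Hypothetical docker-compose.yml (paths, image tag, and host port are assumptions)
services:
  backend:
    build: ./backend            # assumed location of the Spring Boot Dockerfile
    expose:
      - "8080"                  # visible to other containers, not published on the host
  frontend:
    build: ./frontend           # assumed location of the Next.js Dockerfile
    expose:
      - "3000"
  nginx:
    image: nginx:stable
    ports:
      - "80:80"                 # assumed public port
    volumes:
      - ./nginx/default.conf:/etc/nginx/conf.d/default.conf:ro   # assumed path
    depends_on:
      - backend
      - frontend
```

In this arrangement only NGINX publishes a host port, so all public traffic passes through the reverse proxy before reaching the backend on 8080 or the frontend on 3000.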

Public access is available via the server’s public IP address: View Here. Domain configuration is still in progress because we have not yet found a free domain name.

Production #

⚠️ Bonus not yet implemented

Plans for the future:

- Assign a custom domain name

- Add HTTPS using Let’s Encrypt and Certbot

- Separate staging and production environments on different ports or VMs

- Use Docker and NGINX for robust deployment

This section will be updated in a future report.

Vibe Check #

- Held a dedicated team sync to assess workload, morale, and communication.

- Overall team mood is positive — members reported strong collaboration and task ownership.

- Main challenge raised: occasional backend-frontend sync delays during integration.

- Agreed on better scheduling of integration checkpoints to avoid blockers.

- Everyone feels aligned with the project goals and comfortable voicing concerns.

- Committed to maintaining transparent, supportive team dynamics.

Machine Learning #

Discussed the integration with the backend and updated the data models for both projects and candidates to align with the database schema. Additionally, the ML model application was dockerized to streamline deployment and ensure consistency across environments.

Product Design #

- Refined UI for Dashboard, Find Talents, and Find Projects based on feedback.

- Integrated user feedback from usability tests into Figma updates.

- Maintained alignment between design and code using Cursor + Figma MCP.

- Researched and created the QuickSync design layout.

CustDev #

- Prioritized user hypotheses for testing and interviews.

- Collected early feedback on project/talent discovery flow.

- Analyzed user expectations around filtering and profile setup.

- Updated documentation for upcoming validation sessions.

AI Agents Utilization: Cursor with Model Context Protocols (MCP) #

Overview #

During this week, we utilized Cursor in conjunction with Model Context Protocols (MCP) to implement several features and tasks — primarily on the frontend.

We specifically used the Figma Context MCP, a server designed for Cursor. This MCP simplifies and translates Figma API responses into model-relevant layout and styling data, allowing Cursor to generate the structural HTML/CSS for a page directly from Figma designs.

Features and Tasks Completed with Cursor #

- Implementation of the Dashboard Page

- Implementation of the Find Project Page

- Implementation of the Find Talents Page

- Responsiveness implementation for the Login and Signout Pages

MCP Integration Workflow #

The MCP was used to extract styling and structure data from Figma. Work was conducted frame by frame to maximize the effectiveness of Cursor’s code generation. This granular approach helped in maintaining precision and consistency with the original design specifications.

Productivity Impact #

✅ Advantages #

- Facilitated straightforward design implementation: Especially effective for designs with minimal components or simpler structures.

- Accelerated repetitive frontend tasks: Tasks like setting up filtering mechanisms, button logic, and basic layout configurations were significantly faster.

- Motivational boost: Cursor’s ability to produce near-correct outputs reduced procrastination, making it easier to get started and iterate quickly.

⚠️ Disadvantages #

- Incorrect file placement: Cursor occasionally placed correct code into the wrong files. If unnoticed early, these issues became hard to track and resolve.

- Poor adherence to coding conventions: Solutions sometimes worked technically but did not align with best practices or established code organization guidelines.

- Duplicate or unnecessary component creation: Cursor occasionally introduced redundant components or modified unrelated files, potentially causing long-term codebase conflicts.

- Limited maintainability: While the generated code often worked, it lacked flexibility and was sometimes hard to refactor or extend later.

Productivity Metrics #

- Responsiveness & Filtering Features: Cursor provided a 20–25% productivity boost, significantly reducing development time for UI logic and responsiveness.

- Complex Page Implementations (Dashboard, Find Talents, Find Project): Cursor contributed a 5–10% development boost. These pages contained numerous components and complex interactions, where Cursor often struggled to accurately replicate the designs. Multiple iterations, developer intervention, and manual refactoring were required to complete the tasks to standard.

Summary #

Using Cursor with the Figma MCP delivered a noticeable productivity increase for isolated, straightforward frontend tasks. However, for more complex pages and scalable features, developer supervision and involvement remained critical to ensure maintainable, clean, and well-structured code.

Weekly commitments #

Ahmed Baha Eddine Alimi:

- Updated issues on GitHub so that their statuses reflect our current progress View

- Implemented the design for the Dashboard page, with layout for the Projects, Invitations, Proposals, Chats, and Profile panel sections View Commit

- Implemented and enhanced the design for Find Projects and implemented its filtering logic View Commit

- Implemented and enhanced the design for Find Talents and implemented its filtering logic View Commit

- Implemented and enhanced the navbar styling, and implemented the login/logout logic and routing with a dropdown list of available actions View Commit

- Reviewed and merged pull requests on the frontend side View Commit

- Conducted a Vibe Check with the team, collected their feedback, and discussed the overall working environment View Docs

- Reviewed and updated the Figma design based on the latest usability testing and internal demo View Design

- Wrote the report

Yusuf Abudghafforzoda:

- Collaborated with the frontend team to connect the endpoints

- Adjusted APIs during the connection process View Commit

- Wrote unit tests for the project's business logic View Commit

- Wrote integration tests for controllers, repositories, and the database connection View Commit

Egor Lazutkin:

- Formulated and prioritized hypotheses in preparation for user testing View Docs

Asgat Keruly:

- Endpoint Connection for Logout View Commit

- JWT Token Logic View Commit

- Profile Panel Endpoint Connection View Commit

- Routing for SignUp and Login to dashboard View Commit

- Available projects fetching and representation in the frontend View Commit

- Code coverage report and screenshots for unit tests View Docs

- Profile initiation endpoint connection View Commit

Anvar Gilmiev:

- Project Creation Endpoint Connection View Commit

- Connected experience level to profile creation View Commit

- Available Talents fetching and representation in the frontend

- Designed QuickSync UI View Design

Aibek Bakirov:

- CI/CD pipeline setup View Commit

- Environment setup View Commit

- Deployed full backend & frontend on a server View Link

Gurbanberdi Gulladyyev:

- Discussed integration with backend

- Changed data model for projects and candidates to match database View Commit

- Dockerized ML model app View Commit

Plan for Next Week #

Frontend #

- Integrate QuickSync in Projects Dashboard

- Finalize Chats, Invitations, Profile Edit, and Projects pages

- Add loading states and error handling

- Refactor components for clarity

- Add basic end-to-end tests

Backend #

- Finalize endpoints: Invitations, Chats, Profile Edit

- Connect backend with ML QuickSync service

- Add integration tests for matching flow

- Improve validation and error handling

Machine Learning #

- Finish Docker setup for ML inference

- Finalize API for user matching

- Optimize embedding speed

- Test responses with frontend

DevOps #

- Add ML service to Docker Compose

- Add health checks to all services

- Improve GitHub Actions for build/test

CustDev #

- Collect feedback from early users

- Validate matching results and onboarding flow

- Plan final feedback survey

Product & Design #

- Finalize UI for QuickSync and Profile Edit

- Review product flow across mobile & desktop

- Support usability testing

Confirmation of the code’s operability #

We confirm that the code in the main branch:

- [✔] In working condition.

- [✔] Run via docker-compose (or another alternative described in the README.md).